Extending Our Tekton Pipeline

This blog post continues my exploration of Tekton. In the first blog post we created a simple pipeline that ran our frontend tests. In this blog post I will show how to set up pods to support our tests. This also serves as a sketch of how to interact with Kubernetes in general.

Recap

We need a non-trivial project so that we can explore difficulties that tend to arise when writing build pipelines. These blog posts will try to recreate the striv master pipeline. Striv is a typical webapp with a Vue-based frontend and a Python-based backend which keeps its state in MySQL or Postgres - in other words a bog-standard single-page application (SPA). Its main GitHub action contains the following steps:

- Run node unit tests for the frontend (npm run test:unit)
- Run python unit and integration tests for the backend (pytest)
- Build and push a Docker image (docker build and docker push)

We already did step 1, and now it is time for step 2.

Running backend unit tests

The Striv backend tests are mostly straightforward unit tests, but include some tests that exercise the interaction with the supported databases, namely MySQL and Postgres. The project does not, however, provide pytest “marks” to select only the unit tests. Instead, there is a JSON config file called testing-databases.conf which controls what databases are tested. Tekton has a standard task write-file that allows us to write an arbitrary file to disk:

kubectl apply -f https://raw.githubusercontent.com/tektoncd/catalog/main/task/write-file/0.1/write-file.yaml

Writing a simple “unit test only” testing-databases.conf looks like this:

    - name: write-testing-databases-conf
      runAfter: [checkout]
      taskRef:
        name: write-file
        kind: Task
      workspaces:
        - name: output
          workspace: striv-workspace
      params:
        - name: path
          value: testing-databases.json
        - name: contents
          value: '[["sqlite", {"database": ":memory:"}]]'

We can now use the standard pytest task to run our tests.

kubectl apply -f https://raw.githubusercontent.com/tektoncd/catalog/main/task/pytest/0.1/pytest.yaml

The actual pytest task is quite straight-forward:

    - name: test-backend
      runAfter: [write-testing-databases-conf]
      taskRef:
        name: pytest
        kind: Task
      workspaces:
        - name: source
          workspace: striv-workspace
      params:
        - name: PYTHON
          value: "3.7"
        - name: REQUIREMENTS_FILE
          value: requirements-dev.txt

Integration tests

However, we do want to run those MySQL and Postgres tests! So how are we going to get databases up? There are at least two different strategies to provide supporting resources to your tests:

- Use sidecars. Tekton has explicit support for running sidecars to Tasks.

- Deploy Kubernetes resources (i.e. pods) to support our integration tests.

Sidecars sound tempting, but have two problems. First, they can only be used by task definitions and not from a pipeline. That would mean that we could not use the standard pytest task above, but would have to copy it and define our own task that includes the sidecars. Second, we are likely to run into readiness issues and would have to include an explicit script step to wait for the database sockets to become available. These issues are surmountable and had I been at work, I would probably have taken this route.
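For comparison, here is a rough sketch of what the sidecar route might look like. It is not taken from the striv repository: the task name, the throwaway password and the two step scripts are assumptions made for illustration, and the step that runs the tests is a simplified stand-in for what the catalog pytest task does.

```yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: pytest-with-mysql          # hypothetical name
spec:
  workspaces:
    - name: source
  sidecars:
    # Runs alongside the steps, in the same pod
    - name: mysql
      image: mysql:5.6
      env:
        - name: MYSQL_ROOT_PASSWORD
          value: throwaway         # assumption: test-only credentials
        - name: MYSQL_DATABASE
          value: striv_test
  steps:
    - name: wait-for-mysql
      image: mysql:5.6             # reused only because it ships mysqladmin
      script: |
        #!/bin/sh
        # Sidecars share the pod's network, so MySQL listens on 127.0.0.1
        until mysqladmin ping -h 127.0.0.1 --silent; do sleep 2; done
    - name: run-tests
      image: python:3.7
      workingDir: $(workspaces.source.path)
      script: |
        #!/bin/sh
        pip install -r requirements-dev.txt
        pytest
```

The explicit wait step is the price of the second problem mentioned above, and the copied test step is the price of the first.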

But this is a blog post, and we are here to learn Tekton. Deploying a set of Kubernetes resources to provide those databases is a much more generally applicable technique, so let’s see what it takes to do that!

Deploying Kubernetes resources

We can use the standard kubernetes-actions task to deploy resources to a Kubernetes cluster.

kubectl apply -f https://raw.githubusercontent.com/tektoncd/catalog/main/task/kubernetes-actions/0.2/kubernetes-actions.yaml

For the sake of simplicity, I’m inlining the manifest in the task. I could equally set it up as a ConfigMap or mount the striv-workspace and reference a file from the GitHub repository (though we would have had to resort to e.g. sed to inject parameters).

    - name: deploy-test-dependencies
      taskRef:
        name: kubernetes-actions
        kind: Task
      params:
        - name: script
          value: |
            kubectl apply -f - <<EOF
            apiVersion: v1
            kind: Service
            metadata:
              # Unique name so it won't collide with concurrent runs
              name: mysql-$(context.pipelineRun.uid)
              labels:
                # So we can delete both this and Postgres
                test-dependencies: $(context.pipelineRun.uid)
            spec:
              ports:
                - name: mysql
                  port: 3306
                  targetPort: mysql
              selector:
                mysql: $(context.pipelineRun.uid)
            ---
            apiVersion: v1
            kind: Pod
            metadata:
              name: mysql-$(context.pipelineRun.uid)
              labels:
                # So we can delete both this and Postgres
                test-dependencies: $(context.pipelineRun.uid)
                # So the mysql service can find only this pod (and not Postgres)
                mysql: $(context.pipelineRun.uid)
            spec:
              containers:
                - name: mysql
                  image: mysql:5.6
                  ports:
                    - name: mysql
                      containerPort: 3306
                  env:
                    - name: MYSQL_ROOT_PASSWORD
                      value: $(context.pipelineRun.uid)
                    - name: MYSQL_DATABASE
                      value: striv_test
            EOF
            kubectl wait pods -l mysql=$(context.pipelineRun.uid) --timeout=30s --for condition=ready

Note that this task has no runAfter key. Resource creation therefore begins as soon as the pipeline starts, so the databases will very likely be ready by the time we run the backend tests (checking out the GitHub repository and then installing the pip dependencies takes well over a minute). That is likely enough that we can ignore the issue of checking for readiness. One reason for not addressing this issue is that the standard MySQL container restarts mysql once as part of its setup. A proper check therefore gets quite complicated, since even successfully connecting to port 3306 does not guarantee that MySQL is ready to serve our requests.
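If you would rather not rely on timing, one option is to give the MySQL container a readiness probe, so that the kubectl wait call in the script has something meaningful to wait for. The fragment below is only a sketch of the containers section of the pod spec. The probe command and the thresholds are my assumptions and have not been tuned against the striv tests. Requiring two consecutive successes is meant as a hedge against the restart described above.

```yaml
containers:
  - name: mysql
    image: mysql:5.6
    ports:
      - name: mysql
        containerPort: 3306
    readinessProbe:
      exec:
        # -h 127.0.0.1 forces a TCP connection rather than the local socket
        command: ["mysqladmin", "ping", "-h", "127.0.0.1", "--silent"]
      initialDelaySeconds: 5
      periodSeconds: 2
      # Assumption: two successes in a row rides out the one-off restart
      successThreshold: 2
```

With a probe in place the pod takes longer to report ready, so the 30 second timeout given to kubectl wait may need to be raised.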

A very similar entry will be needed for Postgres 11, but that is left as an exercise to the reader.
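As a possible starting point for that exercise, the manifests below mirror the MySQL ones and could be appended inside the same heredoc. The resource names, the postgres label and the port name are assumptions that simply follow the MySQL pattern; POSTGRES_PASSWORD and POSTGRES_DB are the environment variables read by the official postgres image.

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: postgres-$(context.pipelineRun.uid)
  labels:
    # Same label as MySQL, so one delete removes both
    test-dependencies: $(context.pipelineRun.uid)
spec:
  ports:
    - name: postgres
      port: 5432
      targetPort: postgres
  selector:
    postgres: $(context.pipelineRun.uid)
---
apiVersion: v1
kind: Pod
metadata:
  name: postgres-$(context.pipelineRun.uid)
  labels:
    test-dependencies: $(context.pipelineRun.uid)
    # So the postgres service finds only this pod (and not MySQL)
    postgres: $(context.pipelineRun.uid)
spec:
  containers:
    - name: postgres
      image: postgres:11
      ports:
        - name: postgres
          containerPort: 5432
      env:
        - name: POSTGRES_PASSWORD
          value: $(context.pipelineRun.uid)
        - name: POSTGRES_DB
          value: striv_test
```

The matching entry in the test configuration file is not shown here, since its exact keys depend on what the striv tests expect for Postgres.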

The configuration file injected by write-testing-databases-conf will need updating to include MySQL:

    - name: write-testing-databases-conf
      runAfter: [checkout]
      taskRef:
        name: write-file
        kind: Task
      workspaces:
        - name: output
          workspace: striv-workspace
      params:
        - name: path
          value: testing-databases.json
        - name: contents
          value: |
            [
              ["sqlite", {"database": ":memory:"}],
              [
                "mysql",
                {
                  "user": "root",
                  "password": "$(context.pipelineRun.uid)",
                  "host": "mysql-$(context.pipelineRun.uid)",
                  "database": "striv_test",
                  "create_database": true
                }
              ]
            ]

Tearing down the testing resources

Once the test run is finished, we want to drop the database pods again. We could just add a normal task with runAfter: ["test-backend"], but that might trip us up in the future when we add more tasks to our pipeline. Better to drop them as the very last activity (plus we get to explore another Tekton feature). Tekton provides a finally section for this scenario where you can add tasks that should be run at the end of the pipeline, regardless of success.

    finally:
      - name: delete-test-dependencies
        taskRef:
          name: kubernetes-actions
          kind: Task
        params:
          - name: script
            value: |
              kubectl delete pods -l test-dependencies=$(context.pipelineRun.uid) --timeout=30s
              kubectl delete services -l test-dependencies=$(context.pipelineRun.uid) --timeout=30s

Running the pipeline

If we were to run the pipeline now, the deploy-test-dependencies task would fail with a “Forbidden” error message, because it does not have permission to interact with our Kubernetes cluster. Tekton pipelines normally use the “default” service account, which has very few permissions. We can assign a different account, either to the whole pipeline, or to an individual task. This pipeline draws code from several different sources (e.g. Docker Hub, GitHub, PyPI and npmjs.org) so there is plenty of opportunity to sneak in malevolent code. It therefore makes sense to grant permissions only to the necessary tasks.

First, we need to create this service account and give it some permissions. For the sake of completeness, we create a role for it as well; in a production scenario, you would probably have a reasonable group or role available already.

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: test-dependencies-deployer
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: test-dependencies-deployer
    rules:
      - apiGroups: [""]
        resources: [pods, services]
        verbs: [create, delete, get, list, watch]
    ---
    # rbac.authorization.k8s.io/v1beta1 is no longer served as of Kubernetes 1.22
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: test-dependencies-deployer
    subjects:
      - kind: ServiceAccount
        name: test-dependencies-deployer
    roleRef:
      kind: Role
      name: test-dependencies-deployer
      apiGroup: rbac.authorization.k8s.io

Finally, we need to tell Tekton that the two test dependency tasks should use this service account. This is part of the PipelineRun resource and looks like this:

    apiVersion: tekton.dev/v1beta1
    kind: PipelineRun
    metadata:
      name: deploy-striv-run-2
    spec:
      pipelineRef:
        name: deploy-striv # We want to run this pipeline
      serviceAccountNames:
        # Tasks to set up and tear down test dependencies need to use a service account
        - taskName: deploy-test-dependencies
          serviceAccountName: test-dependencies-deployer
        - taskName: delete-test-dependencies
          serviceAccountName: test-dependencies-deployer
      workspaces:
        - # This describes how to provide the workspace that the pipeline requires
          name: striv-workspace
          volumeClaimTemplate:
            spec:
              accessModes:
                - ReadWriteOnce
              resources:
                requests:
                  storage: 1Gi
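A note for readers on more recent Tekton releases: as far as I know, the serviceAccountNames field has been deprecated in favour of taskRunSpecs. The fragment below sketches what the same assignment might look like in that form under the v1beta1 API; check the PipelineRun documentation for your Tekton version before relying on it, since I believe the field is named serviceAccountName in the v1 API.

```yaml
spec:
  pipelineRef:
    name: deploy-striv
  taskRunSpecs:
    # One entry per pipeline task that needs non-default settings
    - pipelineTaskName: deploy-test-dependencies
      taskServiceAccountName: test-dependencies-deployer
    - pipelineTaskName: delete-test-dependencies
      taskServiceAccountName: test-dependencies-deployer
```

Either way, only the two tasks that talk to the cluster get the extra permissions.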

The updated pipeline is here if you want to test it yourself.

kubectl apply -f https://bittrance.github.io/extending-our-tekton-pipeline/deploy-striv-extended.yaml

kubectl apply -f https://bittrance.github.io/extending-our-tekton-pipeline/deploy-striv-run-2.yaml

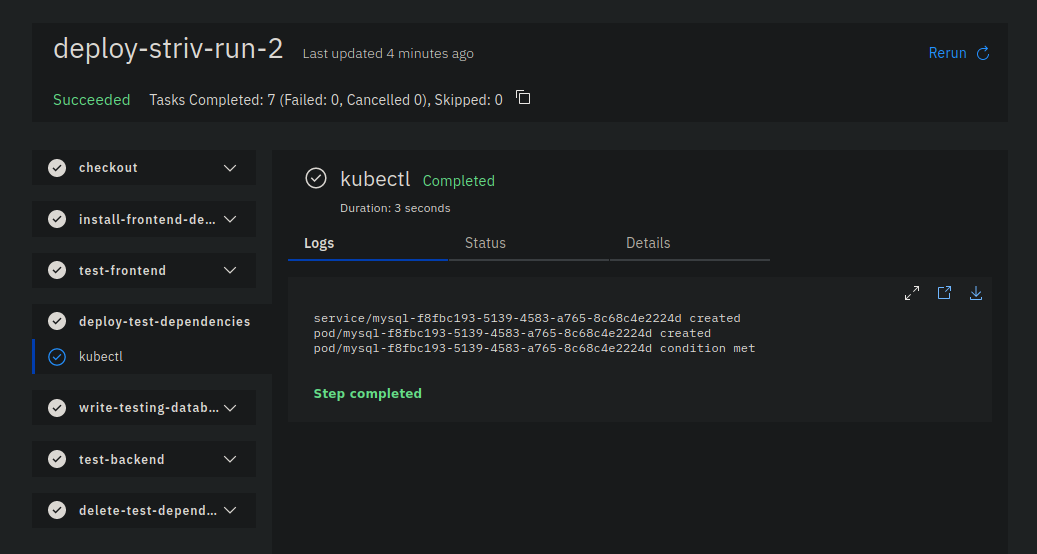

At last, we can apply this pipeline run and get a successful test run:

Next step

We now have a complete testing pipeline. To make this a complete continuous delivery pipeline, it needs to build and deploy the Docker container that is the main artifact. However, that should happen only from some branches, so first we need to set up a trigger so that GitHub informs us when and what changes are pushed to the striv repository. More on this in the next blog post.